Blog on the topic of assistive technology, eLearning, mind mapping, project management, visual learning, collaborative tools, and educational technology

- Export to Mindjet Player

- FastTrack Schedule 9.2

- Flash video

- Flipnotebook

- Fly_Fusion

- Fly_Pentop

- Forms

- Gantt

- Gantt Charts

- Gideon King

- Ginger Software

- Glance

- Google Apps

- Google Presentation

- IBM

- MindView 3 BE

- Mindjet Connect

- elearning

- eye-fi

- file storage

- friedlander

- handwriting recognition

- hovercam T3

- inspiredata_1.5 videos

Saturday, September 25, 2010

Guess What, My New Word Processor is a Pen

Wednesday, September 22, 2010

Have you met ROI and TCO? / Já conhece o ROI e o TCO?

Este artigo está escrito em Inglês e Português

English Version:

ROI and TCO are two well known IT buzzwords. They mean Return On Investment and Total Cost of Ownership (check the links for more technical descriptions). The first one tries to measure how much you earn or lose by investing on something (software product or solution in IT world). The second one tries to be a measure of the sum of costs you have when you decide to buy and use a software product or solution. For the technical staff these two measures are not the most important aspects. Usually we just like or dislike a product period!

But the decisions and choices usually aren't done by technical staff. Today the decisions are taken by people who have tight budgets and that need to show to corporate management that they are using the company's money properly. In other words, people who do and must care about TCO and ROI.

And many times we hear that Informix is nice technically (easy to work with, robust, scalable etc.), but that it's hard to convince higher management to use it (there are still ghosts from the past and FUD spread by competition).

Well, now we have two consulting firms studies precisely about these two topics.

The first comes from Forrester Consulting and analyses the costs, benefits and ROI of implementing Informix. Needless to say it concludes that you actually can save money while investing in Informix, and it explains why. The study was based on a real company.

The second come from ITG group and compares the TCO of Informix vs Microsoft SQL Server for mid sized companies. It's based on a survey with ten's of participants and the conclusions are, as you might expect, favorable to Informix (important to note that MS SQL Server has a reputation of being "economic", so we could let us think that it would be even better for other competitors)

The studies can be freely obtained from IBM website, and as usual you need to fill some data. I know, I know.... this is always annoying and we could arguably consider that this should be only a link to allow quick access to that, but honestly it will take one minute to fill the form, and you have the usual option to request that you won't be bothered (no emails, phone calls etc.)

Don't let it discourage you. The studies are well worth the effort. Their URLs are:

- A Forrester Total Economic Impact Study:

https://www14.software.ibm.com/webapp/iwm/web/signup.do?source=sw-infomgt&S_PKG=infmx-forrester-ap - ITG analyst paper: Cost/Benefit case for IBM Informix Compared to Microsoft SQL Server for midsize organizations:

https://www14.software.ibm.com/webapp/iwm/web/signup.do?source=sw-infomgt&S_PKG=infmx-itg-report

Versão Portuguesa:

ROI e TCO são duas palavras muito em voga no mundo das TI. Qurem dizer Return On Investment (Retorno do investimento) e Total Cost of Ownership (Custo total da posse) (verifique os links para explicações mais técnicas - e ainda mais detalhadas na versão Inglesa). O primeiro tenta medir quanto se ganha ou perde ao investir em algo (produto ou solução de software no mundo das TI). O segundo tenta ser uma medida da soma de custos que teremos quando decidimos usar um produto ou solução de software. Para o pessoal técnico estas medidas não são os aspectos mais importantes. Normalmente nós apenas gostamos ou não e ponto final!

Mas as decisões e escolhas normalmente não são feitas pelo pessoal técnico. Hoje em dia as decisões são tomadas por pessoas que têm orçamentos apertados e que sentem a necessidade de mostrar à administração das empresas que estão a usar o dinheiro da companhia criteriosamente. Por outras palavras, pessoas que se preocupam e devem preocupar com o TCO e ROI.

E muitas vezes ouvimos dizer que o Informix é muito bom tecnicamente (simples, fácil de usar, fiável etc.), mas que é difícil convencer a gestão a usá-lo (ainda existem fantasmas do passado e FUD espalhado pela concorrência)-

Bom, mas agora temos dois estudos de duas empresas de consultoria precisamente sobre estes tópicos.

O primeiro vem da Forrester Consulting e analisa os custos, benefícios e ROI de implementar Informix. Escusado será dizer que concluí que na realidade estamos a poupar dinheiro ao investir em Informix, e explica porquê. O estudo foi baseado num caso real.

O segundo vem da ITG Group e compara o TCO do Informix vs Microsoft SQL Server para médias empresas. É baseado num inquérito com dezenas de participantes e as conclusões são, como seria de esperar, favoráveis ao Informix (é importante notar que o MS SQL Server tem a reputação de ser "económico", por isso poderíamos deixar-nos pensar que seria ainda mais favorável comparando com outros concorrentes)

Os estudos podem ser obtidos gratuitamente no website da IBM, e como é habitual necessitamos de preencher alguns dados. Sim, eu sei, eu sei.... Isto é sempre irritante e é discutível de não deveria ser apenas uma questão de clicar num link para aceder aos estudos, mas honestamente não demora mais de um minuto a preencher o formulário e tem as opções habituais para que não sejamos incomodados (nada de emails ou chamadas telefónicas etc.)

Não deixe que isso o desencorage. Os estudos merecem bem este pequeno esforço. Os seus endereços são:

- A Forrester Total Economic Impact Study:

https://www14.software.ibm.com/webapp/iwm/web/signup.do?source=sw-infomgt&S_PKG=infmx-forrester-ap - ITG analyst paper: Cost/Benefit case for IBM Informix Compared to Microsoft SQL Server for midsize organizations:

https://www14.software.ibm.com/webapp/iwm/web/signup.do?source=sw-infomgt&S_PKG=infmx-itg-report

Monday, September 20, 2010

MagicYad- The Bar & Bat Mitzvah Toolkit

MagicYAD is a very clever and easy to use application that really highlights the strengths of the Livescribe pen based computer technology. When students order MagicYAD they are provided with a complete print out of their Torah portion and the blessings which are printed in Hebrew on the Livescribe dotted paper. Each section of the Torah portion is marked off by an underscore which can be used to read that portion. To read a section students simply tap the pen on the underscore in the Torah portion and are able to hear the Cantor recite the portion. It is really that easy and the fidelity and sound is really fantastic. The beauty of the MagicYad solution is in its simplicity - tap the section you want to hear with your Livescribe pen and listen to the portion being read. Taking it one step further, students can click on the Record button on the bottom of the dotted paper and practice chanting the section and then compare that against the Cantor's recitation by clicking on the Teacher button.. Quite ingenious if you ask me.

MagicYad is certainly a valuable tool for anyone who is learning to read Torah but it can be especially important for student with special needs who may need the multi-sensory approach to learning their portion. MagicYad is easy to use and students will find it a wonderful resource when learning how to chant. Students can learn their portion independently and practice the sections they need to very quickly. For that matter it is an ideal tool for adults who are learning how to chant and read Hebrew. The real take away is that MagicYad will give students the confidence they need to learn how to read their Torah and Haftorah portions and will prepare them for the big day. If you want to see how it works click on this link. For more information about MagicYad click on this link.

Sunday, September 19, 2010

PaperShow for Teachers Primary Notebook Paper

Similarly teachers could trace the letters on the PaperShow for Teachers Primary Notebook before hand and have students practice their penmanship, right on the notebook page. Using this technique teachers can also capture the students handwriting sample, and save it as a PDF in the student's portfolio. To get the students involved in the handwriting lesson simply pass the PaperShow for Teachers Primary Notebook around the class with the pen and have students write the letters. Remember that the Canson Digital Pen has about a 30 foot range from the USB dongle so it is possible to walk around the room using this solution. No need for you to be tied to the front of the classroom.

In my next post I will share with you how to use the PaperShow for Teachers Interactive Paper to design writing templates that would allow students to practice their handwriting on pre-printed pages.

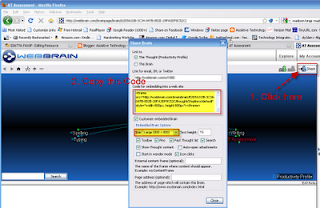

PersonalBrain 6 and Moodle Perfect Together

Over the years I have been a big fan of the PersonalBrain and having used the PersonalBrain 6 Beta Release, I am really impressed with the feature set and the direction of the product. I have used the PersonalBrain as a way to store and easily find information that is important in the work that I do in the field of assistive and educational technology. The PersonalBrain is a great way to visually store information and see the relationships of the data and thoughts that are embedded in your brain. I particularly like the fact that the PersonalBrain is a flexible tool and one that never locks you into a corner. If you want to keep your PersonalBrain structured by all means do so, but if you want less structure just feel free to drop information into your brain and everything is automatically indexed. Using the Search feature or the Advanced Reporting feature allows you to have the information you need at the tip of your fingers.

The PersonalBrain 6 is very tightly integrated with the WebBrain making it very easy to synchronize your brains to the web and back them up. I was particularly interested in the embedding function since my college is in the process of moving our Learning Management System over to Moodle. The question I had, would it be possible to embed one of my brains into Moodle so that students could visually search the database and use it as part of one of me courses? One of the things that I really like about Moodle is how it handles embedding code. I have successfully pasted embedding code from other sources and was able to render videos and flash objects without a hitch. I decided to see how well the embedding code worked and if I could integrate a Webbrain into one of my Moodle courses. This was a lot easier to do than you would think and within minutes I had my Webbrain working with my Moodle course.

I'm sure you want to know how I did it so here are the steps.

- Log into your account at WeBbrain.com and open the brain you would like to embed.

- Click on the Share icon on the top right of the Webbrain screen.

- Copy the Embedding Code from the dialog box

- Open your course in Moodle and Click on Compose a Webpage

- Name the Resource and then Click on the icon <> Toogle HTML Source in the Compose a Web page section and past the embedding code

- Save the page and watch how your WeBbrain is automatically rendered

Tuesday, September 14, 2010

Text-to-Speech vs Human Narration for eLearning

Some challenging questions are being raised in this month’s Big Question - Voice Over in eLearning. Some of the key questions:

- Given the range of solutions for voice-over from text-to-speech, home-grown human voice-over, professional voice-over: how do you decide what's right for your course?

- How do you justify the budget and how does that factor into your choice of solution?

- Are there places where text-to-speech makes sense?

This post is part of the series on Text-to-Speech (TTS) for eLearning written by Dr. Joel Harband and edited by me. The other posts are: Text-to-Speech Overview and NLP Quality, Digital Signal Processor and Text-to-Speech, Using Text-to-Speech in an eLearning Course, and Text-to-Speech eLearning Tools - Integrated Products.

We attacked these questions a little differently than the big question. We particularly focused on:

- Why use Text to Speech (TTS)?

- Why not use human voice-over? Or just use text on the screen?

- How will the quality of the voice affect the quality of the learning? How will the students accept the voices?

To best answer these questions, we asked professionals who have had actual experience in the field: people that have produced eLearning courses with text to speech tools (Speech-Over Professional) and have received feedback from learners.

You can think of this as four case studies of Text-to-Speech. The case studies come from:

- Case Study A. Company-wide training modules by an IT Process & Quality Manager at a Large Global Communications Corporation.

- Case Study B. Global web training by a Systems Engineering Manager at a Large Product Corporation.

- Case Study C. Company-wide training modules by a Lead Courseware Developer at a Security Products Corporation.

- Case Study D. Support for live presentations by a Process Design Consultant.

Why Use Text-to-Speech?

There were a range of answers to the question:

A. Our company has a prior background in TTS - our phones use TTS - and we've tried TTS before for training. This time it is succeeding because of the price, the voice quality, and the integration with PPT. I think it will only get better with time.

The reasons we use TTS are three fold:

* E-learning with voice-over is a preferred training approach within our company. This allows for people to take their training at their own pace; when and where they want to take it. Voice over is very helpful for our associates that English is not their first language.

* Using human voices makes it more difficult to create and maintain the training. Only a few people have the quality voice with minimal accent to perform the recordings. This creates a resource constraint for the creation and maintenance of e-learning material. Usually, the e-learning was out-of-date with the subject of the training and quickly became obsolete.* Voice over, especially computer voice, has proven to be helpful to associates that English is not their first language. The computerized voice is more consistent in pronunciation and speaks at a more steady pace. Thus, allowing people to understand the material more easily.

B. It offers a significant advantage over other methods of providing audio with PowerPoint.

C. We were looking for something that provided us with a short production and turn-around time, that our small development team could do in-house. Something easy to edit and change on the fly, without having to send it out, or schedule lengthy voiceover work.

These responses echo what we generally expect, Text-to-Speech offers a solution that is much faster to produce and 100x faster to modify as changes occur. This means a faster time-to-market and lower cost than human narration. There are obviously ways to keep human voice-over costs down by using in-house talent, but it still takes significantly more time. And it’s especially true when changes occur.

If you think about a simple spectrum of solutions:

| Text on Screen No Voice-Over | Text-to-Speech Narration | Human Narration In-House | Human Narration Professional |

| Lowest Cost Fastest | Highest Cost Slowest |

Certainly there’s a balance to be found. We’ll consider other factors below.

I thought the response from Case Study D was particularly interesting:

D. Initially, I experimented with TTS as a way to add content to a presentation that I as a presenter could use to refresh myself before presenting. I found that the act of adding TTS made me aware of a number of design issues with the presentation. Then I thought: wouldn't this be great as a way for participants to refresh their knowledge after the training.

One of the recommendations around the use of Text-to-Speech is that it’s used as part of any course that eventually will be recorded by Humans to prepare the script as part of authoring. That way, you have a good idea what it will sound like once it’s recorded. In this case, they were using Text-to-Speech to prepare themselves for a presentation. But instead of recording themselves against the slides, they used TTS. They could easily listen to their script. That’s actually a fantastic idea. And it led this person to eventually use the TTS as the basis of creating courses that could be used after the training sessions.

Why didn't you use human voice-over?

Obviously, cost and time are a major factor here. But a lot of the specific reasons have more to do with a hassle factor of using voice talent. Here were the responses:

A. Mainly for updating where I don't have to look for the original voice talent who can now charge more. We don't have voice talent available internally.

B. Publishing a straight recording keeps all of the errors of the subject matter expert, speaking too fast, low sound quality, running on or off topic. Maintaining the recorded voice requires an entire rerecording and production where TTS is much simpler.

C. For our first project, we did use human voice-over as well as text. We found that the added production time, and having to schedule around voice over, plus re-doing entire segments for one small correction, to get the sound to match, was prohibitive both cost and time-wise.

D. I don't have a particularly great voice for adding to the slide so that's a factor. But the other factor is that it's 100 times easier to change text than re-record speech. Even if I were to record speech I would first do a TTS and then only after I believed it to be final, might I record.

Anyone who has used in-house or professional talent knows about the hassle factor of getting things done. You often find yourself not doing retakes when something is wrong or there are changes just because it’s too much work. Even when you do your own voice-over, there’s still more time involved. So adding to the spectrum above:

| Text on Screen No Voice-Over | Text-to-Speech Narration | Human Narration In-House | Human Narration Professional |

| Lowest Cost Fastest Easy to Change Lowest Hassle | Highest Cost Slowest Hard to Change Biggest Hassle |

Why didn’t you just use Text on Screen?

I think some of the other responses to the Big Question address this much better – why use voice-over at all? But a couple of the reasons from these case studies have to do with providing support to ESL learners:

B. We asked our students which helps them learn; subtitles only or subtitles with speech. They agreed that subtitles with speech are better. English as second language students even said it helped them learn English.

C. Since our training modules are used world-wide, in English, we wanted voice as well as text (all our training modules have both). Many foreign students have much better vocal/listening comprehension vs. just reading comprehension, if English is not their first language, so having voice as well as text was important to us.

I would highly recommend looking at some of the specific answers to Voice Over in eLearning that talk to issues of when to use voice-over in eLearning. For example:

- Learning environment – some environments audio is not good. In other cases, it’s great to have audio to add engagement.

- To support graphics or animations on screen – large amounts of text would be distracting.

I will caution you that some of the responses suggest that Voice-Over roughly equates to slower learning with no improved effect; and limits your cultural appeal.

There’s also some suggestion that the script should be available with a mute button to be read by learners who prefer that modality. I would claim this would definitely argue for Text-to-Speech.

Others argue that to capture emotion and to engage, voice-over is very important.

So, my spectrum table becomes woefully inadequate to capture all of this. Anyone want to take a shot?

Concerns About Quality?

In each case, there was concern about quality, but the result was good enough, especially with caveats to be used. I think the responses speak (pardon the pun) for themselves.

A. For many English speaking associates, the computerized voice can be very boring and mundane. When we researched TTS about 5 years ago the higher quality voices were too expensive. Today, those same voices are much less expensive and have broken that barrier of being too "computerish". Training the voices is an important issue. The support provided by Speech-Over for modulation and pronunciation is good.

B. We were concerned that it would be too mechanized sounding. It turned out not to be and was well accepted by students.

C. Yes, we were concerned that the slight robotic cadence might detract from the training, just because it does not come out completely natural all the time. The Paul voice is very good, but still recognizable as mechanical. To counter this, we put a statement up front in our training introduction about the narration being computer generated, so an awareness and expectation of this is set with the students before they even begin the training. With this disclaimer in place, we have had no complaints at all about the "voice" in the presentations, and our technical training modules using this TTS have been successfully taken by hundreds of students world-wide as part of their technical service training with us. As we worked with the TTS, we quickly developed a style of writing the scripts that really worked well with TTS, and minimizes the difference between using a computer generated voice, vs. human voice-over. In fact, we received complaints about our first human voice-over training for a few pronunciation gaffs, and some pacing issues, where we have received none at all on our subsequent TTS developed training modules.

D. The voice quality is extremely important. As soon as people hear what sounds like a robot voice they tend to immediately believe the presentation to be cheap like the voice. So voice quality is the key. The current voices although very good are more monotonous than a human voice. I know that there are some tools for changing Paul's voice, for example, but I haven't tried them.

Results? Acceptance by Students?

Again, the responses are somewhat self-evident:

A. Yes. The TTS technology coupled with the software allowed us to create e-learning material in about half the time as human voice over. The maintenance of the e-learning material takes 75% less time than maintaining material with human voice over. This allows us to create and maintain material much faster with less resources and without needing specialized resources that have voices specialized for recording.

We have produced courses for 6000 people in the company and we are getting good feedback: 80% are satisfied, 10% love it and 10% feel offended. My conclusion is that the voices are "good enough" for training applications.

B. Yes. It actually helped us reduce the length of training by having the subject matter experts edit their transcripts and eliminate extra unnecessary speech.

C. Yes and more. The ease of converting the text to voice, coupled with the ability to go back and instantly change / edit / correct narration on a single slide, and have it exactly match the voice, volume, timber, etc. of every other slide, recorded days or weeks or months earlier is invaluable. Short technical/repair training modules that took us a month or more to develop and schedule voice-over and re-voice-over to correct and edit, now literally take us just days to develop start to finish, right on the desktop. Acceptance by the students has been 100%. All the students taking our TTS based training are required to pass a Certification test after they complete those training modules. Our first-time pass rates are identical for our earlier human voice-over training, vs. our current TTS based training - so if outcomes are the measure, for us, there is no difference between the two as far as their functional performance, and the Return On Investment is much higher for us with the TTS. In surveying students who completed our TTS based training, they all said the same thing, that at first it was a bit different, being computer generated narration, but after they were into the training their ear became tuned to the voice, and it really wasn't any different than listening to someone talk who had a particular regional or foreign accent to their speech.

The comment about learners getting used to the voice is interesting. I think putting a caveat up front and then learners getting used to the voice is an important take-away.

Summary

Obviously, there are complex questions around the use of voice-over at all. These are hard to capture in the simple kind of spectrum table that I attempted above. Some specific things that jump out at me:

- The TTS voice quality was acceptable for eLearning applications and did not detract from learning effectiveness.

- High Emotion - Clearly if you have sensitive material with high emotion, likely using actual voices (key executives or employees) might be best. Professional talent can also help with this.

- Text-to-speech accelerates development time vs. human voice-over. And maintaining the voice is possible.

- Much of the comparison of Text-to-speech vs. Human narration focuses on the hassle factor more than cost.

- Text-to-speech makes it easy to keep the material up-to-date and accurate vs human recordings that can become obsolete and would need to be re-recorded.

- Caveat Text-to-Speech – Put a note up front so that learners are more open to the voice.

- Use Text-to-Speech to prepare your scripts

- If you expect change, don’t use human narration

I welcome your thoughts and comments.

E-book Popularity Skyrockets

The popularity of e-books grows every day, and writers who never would

have considered writing for an e-book publisher even a year ago are

changing their minds. With online bookstores and publishers reporting

increasing numbers of e-book sales every month, with the popularity of

e-book readers, it makes sense to take advantage of this market.

And in the last year or so, even traditional print publishing houses

have begun to recognize the growing popularity of Ebooks. Many are

implementing digital programs of their own for new releases as well as

their backlists. No longer do those who prefer to read online or on an

e-book reader have to restrict themselves to small online publishers

or bookstores. The variety and availability of books that are

digitally published is expanding rapidly.

In fact, the Association of American Publishers recently reported an

amazing increase in the number of e-book sales in the U.S. According

to the AAP, gross Ebook sales in June 2010 reached $29.9 million.

That’s an amazing 118.9% increase in sales from the same month in

2009. That’s a huge difference and it shows no sign of slowing down

any time soon. In fact, while everyone agrees that paperback and

hardback books will never completely disappear, it isn’t beyond belief

that all books will be available in digital format in the coming

years.

Have you tried reading a book electronically? If not, you should

definitely check one out. E-books are written, edited, and published

to the same standards as print books, and you’re sure to find

something you like. No matter what genre of fiction you enjoy, or what

subject of nonfiction appeals to you, look for a book online and give

it a try.

About the author: James Mowery is a computer geek that writes about

technology and related topics. To read more blog posts by him, go to

http://www.ledtv.org

Monday, September 13, 2010

Livescribe Releases Pencast Player App for the iPad

Sunday, September 12, 2010

SPARKvue for the iPad from Pasco

With the start of the semester I have been busy preparing for my classes but still have some reviews in the hopper that I have been meaning to post for some time. This past summer I had a chance to speak with Wayne Grant, Ph.D., Chief Education Officer at Pasco, a leading science education company located in California about the release of SPARvue for the iPad. Pasco has been known for their high quality science probes and software for some time now and are leading proponents of mobile solutions to help make science education come alive in the classroom. Dr. Grant was kind enough to show me the latest release of SPARKvue a software app that runs on the iPad that can teamed up with various probes so that students can collect data in real time.

Using the PASSPORT Airlink 2 students can collect real time data on the Ipad without the need to be directly connected. The PASSPORT Airlink 2 uses Bluetooth technology to automatically send data from the sensors to the iPad for instant visual analysis. I had a chance to work with the PASSPORT Airlink 2 and one of Pasco's Weather sensors to see what it would be like to use, to collect data. Pairing the Bluetooth PASSPORT Airlink 2 with the iPad was a cinch and within seconds after plugging in the Weather probe I was collecting data about the humidity in New Jersey. Based on my experience I would say that the PASSPORT Airlink 2 has good range and I was able to collect data from at least 20to 25 feet away from my iPad. It was really exciting to be able to see the data streaming live to my iPad using the PASSPORT Airlink 2 and the Weather probe. Pasco should be commended for designing such and elegant solution that can be used with the iPad to give students hands on opportunity to collect data in real time. Pasco once again has shown their ingenuity and innovation in the design and implementation of this device. If you would like to try out the SPARKvue app just go to the Apple Apps Store and download to see how it works.

Friday, September 10, 2010

Free ARRA Webinar Sponsored by Don Johnston

Free ARRA Webinar: A year into ARRA funding, and only a year left.

Now what?

Two dates: September 22 & September 29With an additional $100,000 left in ARRA funding per school, even just 1-2% can have an impact!

Join us for this one-hour webinar presented by Brian S. Friedlander, Ph.D. Dr. Friedlander will share stories of districts in New Jersey who have used ARRA funding in creative ways to build an AT infrastructure and implement it.

Join us for this one-hour webinar presented by Brian S. Friedlander, Ph.D. Dr. Friedlander will share stories of districts in New Jersey who have used ARRA funding in creative ways to build an AT infrastructure and implement it. The webinar will focus on these questions:

- What are some of the misconceptions about ARRA funding?

- How can I advocate for ARRA funding?

- What has worked for other districts?

- What kind of implementation plan should I present to my special ed director?

- Once I have the funding, how can I use it most effectively?

Sign Up Now!

Tuesday, September 7, 2010

Let's optimize / Vamos optimizar

Este artigo está escrito em Inglês e Português

English version.

In this article I'll show you a curious situation related to the Informix query optimizer. There are several reasons to do this. First, this is a curious behavior which by itself deserves a few lines. Another reason is that I truly admire the people who write this stuff... I did and I still do some programming, but my previous experience was related to business applications and currently it's mostly scripts, small utilities, stored procedures etc. I don't mean to offend anyone, but I honestly believe these two programming worlds are on completely different leagues. All this to say that a query optimizer is something admirable given the complexity of the issues it has to solve.

I also have an empiric view or impression regarding several query optimizers in the database market. This impression comes from my personal experience, my contact with professionals who work with other technologies and some readings... Basically I think one of our biggest competitors has an optimizer with inconstant behavior, difficult to understand and not very trustworthy. One of the other IBM relational databases, in this case DB2, has probably the most "intelligent" optimizer, but I have nearly no experience with it. As for Informix, I consider it not the most sophisticated (lot of improvements in V10 and V11, and more to come in Panther), but I really love it for being robust. Typically it just needs statistics. I think I only used or recommended directives two times since I work with Informix. And I believe I never personally hit an optimizer bug (and if you check the fix lists in each fixpack you'll see some of them there...)

To end the introduction I'd like to add that one of my usual tasks is query optimizing... So I did catch a few interesting situations. As I wrote above, typically an UPDATE statistics solves the problem. A few times I saw situations where I noticed optimizer limitations (things could work better if it could solve the query another way), or even engine limitations (the query plan is good, but the execution takes unnecessary time to complete). The following situation was brought to my attention by a customer who was looking around queries using a specific table which had a reasonable number of sequential scans.... Let's see the table schema first:

create table example_table

(

et_id serial not null ,

et_ts datetime year to second,

et_col1 smallint,

et_col2 integer,

et_var1 varchar(50),

et_var2 varchar(50),

et_var3 varchar(50),

et_var4 varchar(50),

et_var5 varchar(50),

primary key (et_id)

)

lock mode row;

create index ids_example_table_1 on example_table (et_timestamp) using btree in dbs1

And then two query plans they manage to obtain:

QUERY: (OPTIMIZATION TIMESTAMP: 08-25-2010 12:31:56)

------

SELECT et_ts, et_col2

FROM example_table WHERE et_var2='LessCommonValue'

AND et_col1 = 1 ORDER BY et_ts DESC

Estimated Cost: 5439

Estimated # of Rows Returned: 684

Temporary Files Required For: Order By

1) informix.example_table: SEQUENTIAL SCAN

Filters: (informix.example_table.et_var2 = 'LessCommonValue' AND informix.example_table.et_col1 = 1 )

So this was the query responsible for their sequential scans. Nothing special. The conditions are on non indexed fields. So no index is used. It includes an ORDER BY clause, so a temporary file to sort is needed (actually it can be done in memory, but the plan means that a sort operation has to be done).

So, nothing noticeable here... But let's check another query plan on the same table. In fact, the query is almost the same:

QUERY: (OPTIMIZATION TIMESTAMP: 08-25-2010 12:28:32)

------

SELECT et_ts, et_col2

FROM example_table WHERE et_var2='MoreCommonValue'

AND et_col1 = 1 ORDER BY et_ts DESC

Estimated Cost: 5915

Estimated # of Rows Returned: 2384

1) informix.example_table: INDEX PATH

Filters: (informix.example_table.et_var2 = 'MoreCommonValue' AND informix.example_table.et_col1 = 1 )

(1) Index Name: informix.ids_example_table_1

Index Keys: et_ts (Serial, fragments: ALL)

Ok... It's almost the same query, but this time we have an INDEX path.... Wait... Didn't I wrote that the conditions where not on indexed column(s)? Yes. Another difference: It does not need a temporary file for sort (meaning that it's not doing a sort operation). Also note that there are no filter applied on the index. So, in this case it's doing a completely different thing than on the previous situation. It reads the index (the whole index), accesses the rows (so it gets them already ordered) and then applies the filters discarding the rows that do not match. It does that because it wants to avoid the sort operation. But why does it change the behavior? You might have noticed that I already included a clue about this. To protect data privacy, I changed the table name, table fields and the query values. And in the first query I used "LessCommonValue" and on the second I used "MoreCommonValue". So as you might expect, the value on the second query is more frequent in the table than the value of the first query. How do we know that? First, by looking at the number of estimated rows returned (684 versus 2384). How does it predict this values? Because the table has statistics, and in particular column distribution. Here are them for the et_var2 column (taken with dbschema -hd):

Distribution for informix.example_table.et_var2

Constructed on 2010-08-25 12:19:04.31216

Medium Mode, 2.500000 Resolution, 0.950000 Confidence

--- DISTRIBUTION ---

( AnotherValue )

--- OVERFLOW ---

1: ( 4347, Value_1 )

2: ( 4231, Value_2 )

3: ( 3129, MoreCommonValue )

4: ( 2840, Value_4 )

5: ( 2405, Value_5 )

6: ( 3854, Value_6 )

7: ( 3941, Value_7 )

8: ( 2086, Value_8 )

9: ( 4086, Value_9 )

10: ( 4144, Value_10 )

11: ( 4086, Value_11 )

12: ( 2666, Value_12 )

13: ( 2869, Value_13 )

14: ( 3245, Value_14 )

15: ( 2811, Value_15 )

16: ( 3419, Value_16 )

17: ( 2637, Value_17 )

18: ( 3187, Value_18 )

19: ( 4347, Value_19 )

20: ( 898, LessCommonValue )

21: ( 4144, Value_20 )

22: ( 2260, Value_21 )

23: ( 3999, Value_22 )

24: ( 1797, Value_23 )

25: ( 2115, Value_24 )

26: ( 2173, Value_25 )

27: ( 4144, Value_26 )

If you're not used to look at this output I'll describe it. The distributions are showed as a list of "bins". Each bin, except the first one, contains 3 columns: The number of record it represents, the number of unique values within that bin, and the highest value within that bin. The above is not a good example because the "normal" bin values has only one (AnotherValue) and given it's the first one it means it's the "lowest" value in the column. Let's see an example from the dbschema description in the migration guide:

( 5)

1: ( 16, 7, 11)

2: ( 16, 6, 17)

3: ( 16, 8, 25)

4: ( 16, 8, 38)

5: ( 16, 7, 52)

6: ( 16, 8, 73)

7: ( 16, 12, 95)

8: ( 16, 12, 139)

9: ( 16, 11, 182)

10: ( 10, 5, 200)

Ok. So, looking at this we could see that the lowest value is 5, and that between 5 and 11 we have 16 values, 7 of them are unique. Between 11 and 17 we have 6 unique values etc.

Going back to our example we see an "OVERFLOW" section. This is created when there are highly repeated values that would skew the distributions. In these cases the repeated values are showed along the number of times they appear.

So, in our case, the values we're interested are "LessCommonValue" (898 times) and "MoreCommonValue" (3129 times). So with these evidences we can understand the decision to choose the INDEX PATH. When the engine gets to the end of the result set it's already ordered. And in this situation (more than 3000 rows) the order by would be much more expensive than for the other value (around 800 rows).

It's very arguable if the choice is effectively correct. But my point is that it cares... Meaning it goes deep in it's analysis, and that it takes into account many aspects, and is able to decide to use a query plan that it's not obvious (and choose it for good reasons).

Versão portuguesa:

Neste artigo vou mostrar uma situação curiosa relacionada com o optimizador de queries do Informix. Há várias razões para fazer isto. Primeiro porque é um comportamento interessante que por si só merece umas linhas. Outra razão é que eu admiro verdadeiramente as pessoas que escrevem este componente.... Eu fiz e ainda faço alguma programação, mas a minha experiência anterior estava relacionada com aplicações de negócio e actualmente é essencialmente scripts, pequenos utilitários, stored procedures etc. Não querendo ofender ninguém, mas honestamente acredito que estes dois mundos de programação estão em campeonatos completamente diferentes. Tudo isto para dizer que o optimizador é algo admirável dada a complexidade dos problemas que ele tem de resolver.

Também tenho uma visão empírica ou apenas uma impressão relativamente a alguns optimizadores no mercado de bases de dados. Esta impressão deriva da minha experiência pessoal, do meu contacto com profissionais que trabalham com outras tecnologias e de alguma leitura... Basicamente penso que o nosso maior competidor tem um optimizador com um comportamento insconstante, difícil de entender e pouco confiável. Uma das outras bases de dados relacionais da IBM, DB2 neste caso, tem provavelmente o optimizador mais "inteligente", mas não tenho practicamente nenhuma experiência com ele. No caso do Informix, considero que não é o mais sofisticado (muitas melhorias na versão 10 e 11, e mais no Panther), mas realmente adoro a sua robustez. Tipicamente apenas precisa de estatísticas. Julgo que só utilizei ou recomendei optimizer directives duas vezes desde que trabalho com Informix. E que me lembre nunca bati pessoalmente num bug do optimizador (embora baste consultar a lista de correcções de cada fixpack para sabermos que eles existem).

Para terminar esta introdução gostaria de referir que uma das minhas tarefas habituais é a optimização de queries. Por isso já encontrei algumas situações interessantes. Como escrevi acima, tipicamente um UPDATE STATISTICS resolve o problema. Algumas vezes encontrei limitações do optimizador (as coisas poderiam correr melhor se ele pudesse resolver as queries de outra maneira) e até limitações do motor (o plano de execução gerado é bom, mas a execução leva tempo desnecessário a correr). A situação que se segue chegou-me à atenção através de um cliente que estava a analisar queries sobre uma tabela que estava a sofrer muitos sequential scans.... Vejamos a definição da tabela primeiro:

create table example_table

(

et_id serial not null ,

et_ts datetime year to second,

et_col1 smallint,

et_col2 integer,

et_var1 varchar(50),

et_var2 varchar(50),

et_var3 varchar(50),

et_var4 varchar(50),

et_var5 varchar(50),

primary key (et_id)

)

lock mode row;

create index ids_example_table_1 on example_table (et_timestamp) using btree in dbs1

E agora dois planos de execução que obtiveram:

QUERY: (OPTIMIZATION TIMESTAMP: 08-25-2010 12:31:56)

------

SELECT et_ts, et_col2

FROM example_table WHERE et_var2='ValorMenosComum'

AND et_col1 = 1 ORDER BY et_ts DESC

Estimated Cost: 5439

Estimated # of Rows Returned: 684

Temporary Files Required For: Order By

1) informix.example_table: SEQUENTIAL SCAN

Filters: (informix.example_table.et_var2 = 'ValorMenosComum' AND informix.example_table.et_col1 = 1 )

Esta era uma das queries responsável pelos sequential scans. Nada de especial. As condições da query incidem em colunas não indexadas. Portanto não utiliza nenhum indíce. Inclui uma cláusula ORDER BY, por isso um necessita de "ficheiros" temporários para ordenação. (na verdade pode ser feito em memória, mas o plano quer dizer que precisa de fazer uma operação de ordenação).

Portanto nada a salientar aqui.... Mas vejamos o outro plano sobre a mesma tabela. Na verdade a query é muito semelhante:

QUERY: (OPTIMIZATION TIMESTAMP: 08-25-2010 12:28:32)

------

SELECT et_ts, et_col2

FROM example_table WHERE et_var2='ValorMaisComum'

AND et_col1 = 1 ORDER BY et_ts DESC

Estimated Cost: 5915

Estimated # of Rows Returned: 2384

1) informix.example_table: INDEX PATH

Filters: (informix.example_table.et_var2 = 'ValorMaisComum' AND informix.example_table.et_col1 = 1 )

(1) Index Name: informix.ids_example_table_1

Index Keys: et_ts (Serial, fragments: ALL)

Ok... É quase a mesma query, mas desta vez temos um INDEX PATH... Um momento!.... Não escrevi que as condições não indiciam sobre colunas indexadas? Sim. Outra diferença: Neste caso não necessita de "temporary files" (o que significa que não está a fazer ordenação). Note-se ainda que não existe nenhum filtro aplicado ao indíce. Portanto, neste caso está a fazer algo completamente diferente da situação anterior. Lê o indíce (todo o indíce), acede às linhas (assim obtém-nas já ordenadas) e depois aplica os filtros descartando as linhas que não verificam as condições. Faz isto porque pretende evitar a operação de ordenação. Mas porque é que muda o comportamento? Poderá ter reparado que já inclui uma pista sobre isto. Para proteger a privacidade dos dados mudei o nome da tabela, dos campos e dos valores das queries. E no primeiro caso utilizei "ValorMenosComum" e no segundo usei "ValorMaisComum". Portanto como se pode esperar, o valor da segunda query é mais frequente na tabela que o valor usado na primeira query. Como sabemos isso? Primeiro olhando para o valor estimado de número de linhas retornadas pela query (684 vs 2384). Como é que o optimizador prevê estes valores? Através das estatísticas da tabela, em particular as distribuições de cada coluna. Aqui estão as mesmas para a coluna et_var2 (obtidas com dbschema -hd):

Distribution for informix.example_table.et_var2

Constructed on 2010-08-25 12:19:04.31216

Medium Mode, 2.500000 Resolution, 0.950000 Confidence

--- DISTRIBUTION ---

( OutroValor )

--- OVERFLOW ---

1: ( 4347, Value_1 )

2: ( 4231, Value_2 )

3: ( 3129, ValorMaisComum )

4: ( 2840, Value_4 )

5: ( 2405, Value_5 )

6: ( 3854, Value_6 )

7: ( 3941, Value_7 )

8: ( 2086, Value_8 )

9: ( 4086, Value_9 )

10: ( 4144, Value_10 )

11: ( 4086, Value_11 )

12: ( 2666, Value_12 )

13: ( 2869, Value_13 )

14: ( 3245, Value_14 )

15: ( 2811, Value_15 )

16: ( 3419, Value_16 )

17: ( 2637, Value_17 )

18: ( 3187, Value_18 )

19: ( 4347, Value_19 )

20: ( 898, ValorMenosComum )

21: ( 4144, Value_20 )

22: ( 2260, Value_21 )

23: ( 3999, Value_22 )

24: ( 1797, Value_23 )

25: ( 2115, Value_24 )

26: ( 2173, Value_25 )

27: ( 4144, Value_26 )

Para o caso de não estar habituado a analisar esta informação vou fazer uma pequena descrição da mesma. As distribuições são mostradas como uma lista de "cestos". Cada cesto, excepto o primeiro, contém 3 colunas: O número de registo que representa, o número de valores únicos nesse cesto e o valor mais "alto" nesse cesto. O que está acima não é um bom exemplo porque a secção de "cestos" normal só tem um valor (OutroValor), e dado que é o primeiro apenas tem o valor, o que significa que é o valor mais "baixo" da coluna. Vejamos um exemplo retirado da descriçao do utilitário dbschema no manual de migração:

( 5)

1: ( 16, 7, 11)

2: ( 16, 6, 17)

3: ( 16, 8, 25)

4: ( 16, 8, 38)

5: ( 16, 7, 52)

6: ( 16, 8, 73)

7: ( 16, 12, 95)

8: ( 16, 12, 139)

9: ( 16, 11, 182)

10: ( 10, 5, 200)

Portanto olhando para isto podemos ver que o valor mais baixo é 5, que entre 5 e 11 temos 16 valores, 7 dos quais são únicos. Entre 11 e 17 temos 6 valores únicos etc.

Voltando à nossa situação concreta vemos uma secção de "OVERFLOW". Esta secção é criada quando há valores altamente repetidos que iriam deturpar ou desequilibrar as distribuições. Nestes casos os valores repetidos são mostrados lado a lado com o número de vezes que aparecem.

Assim, no nosso caso, estamos interessados nos valores "ValorMenosComum" (898 vezes) e "ValorMaisComum" (3129 vezes). Portanto, com estas evidências podemos perceber a decisão de escolher o INDEX PATH. Quando o motor chega ao final do conjunto de resultados o mesmo já está ordenado. E nesta situação (mais de 3000 linhas) a ordenação consumiria mais recursos qeu para a outra situação em análise (cerca de 800 linhas).

Pode ser muito discutivel se a decisão é efectivamente correcta. Mas o que quero salientar é que o optimizador se preocupa.... Ou seja, a análise que faz das condições e opções é profunda e é capaz de escolher um plano de execução que não é óbvio (e escolhê-lo por boas razões).