Just when you've made the transition from the prior generation of CBT authoring tools (e.g., Authorware, Toolbook) to the new generation of WBT authoring tools (e.g., Captivate, Lectora), it looks like things are slowly shifting again.

The shift I'm seeing is away from the design of pure "courseware" solutions and toward "reference hybrid" solutions.

To explain this, I need to step back and deal with the fact that terminology around eLearning Patterns is problematic.

In my mind, "courseware" is interactive (to some level) instruction run asynchronously. It is created with an authoring tool or a Learning Content Management System. Often there's periodic quizzing to test understanding. It's designed to hit particular instructional objectives. It's the stuff you've seen demonstrated at every training conference over the past ten years. Oh, and it almost certainly has a NEXT BUTTON.

"Reference" is static content, meaning there is no interaction other than letting the user link from page to page and search. It is asynchronous. It is normally a series of web pages, but it can also be PDF or other document types. It can be created using wiki software, a content management system, web editing software, or even Microsoft Word saved as HTML. It's designed to provide either real-time support for work tasks or near real-time support for lookup. It is often organized around particular job functions and tasks to provide good on-the-job support. It almost certainly does not have a NEXT button and should have search. You probably don't see many demonstrations of these kinds of solutions, because they aren't sexy.

I realize that these terms are vague, so let me look at what other people have to say. If you look at various eLearning glossaries (Wikipedia eLearning Glossary, WorldWideLearn eLearning Glossary, Cybermedia Creations eLearning Glossary), you'll find hopeless definitions of "courseware" and no definition of "reference." Reference sometimes shows up as "job aids," "online support," "online help," or various other things. Each of these terms is slightly more specific than "reference," because each generally implies something about the specific structure of the content. Thus, to me, "reference" is a good umbrella term.

By the way, maybe you can help me: am I missing an alternative term for "reference"? Or are we just calling these "web pages"?

I did try another avenue to find better definitions. I went over to Brandon Hall's Awards Site. It has awards for "custom content" organized by the type of function the content supports, e.g., sales. I would think that "content" is an inclusive term covering both courseware and reference. However, the first question in the judging criteria is: "How engaging is this entry?" So I have to assume they really are looking for courseware and not for reference material. Reference is inherently less engaging; in fact, you could argue that you don't want it to be engaging, you want it to be quick and to the point.

The other category is Learning Technology. Its sub-categories are:

- Course Development Tools

- Software and System Simulation Development Tools

- Soft Skills and Technical Simulation Development Tools

- Tests or Instructional Games Creation Tools

- Rapid Content Creation Tools

- Live E-Learning/Virtual Classroom Technology

- Just-in-Time Learning Technology

- Innovations in Learner Management Technology

- Innovations in Learning Content Creation and Learning Content Management Technology

- Open

None of this makes me think about "reference," but maybe it would be included in either "Rapid Content Creation" or "Just-in-Time."

Okay, now that I'm done ranting about terminology, here's the real point ...

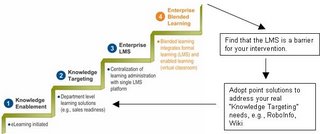

We are seeing a significant shift in development away from mostly creating courseware to creating more-and-more reference materials designed to be just-in-time support.

Even more, we are seeing a shift toward hybrid reference and courseware combinations where the courseware is embedded within the reference. So, if a user doesn't quite understand a concept, or you want to make sure you provide a nice introduction, you embed that courseware within the web pages.

As an example, we've created several hybrid reference/courseware solutions that are designed both to introduce and to support the use of software. Traditionally, we would have built courses in something like Captivate and told users to take those courses first. Separately, we would have created a "support" site with a FAQ and help on various tasks.

In the hybrid solution, we made the support site the first place users go and put a prominent "First Time User" link on the home page. That link takes new users to instructions on how to get up and running. Most of the content is presented as static web pages that explain how to perform particular tasks, but some pages contain embedded Captivate movies that demonstrate or simulate use of the system.

This design has given us several advantages:

- End-users can get started with the application quickly and receive incremental help on the use of the system as they need it. We've eliminated most up-front training.

- End-users only see one solution that provides "help on using the application" as opposed to seeing "training" and "support" separately.

- It costs less to produce, because there's greater content sharing between training and support materials and because we build more of the content as reference, which is cheaper to create.

I'll be curious to hear if other people are seeing a similar shift in what they are building.

Keywords: eLearning Trends, eLearning Resources